Imaging of Matter

Microscopy: Researchers call for greater awareness of AI challenges

3 October 2025

Photo: Nature, reprint with permission

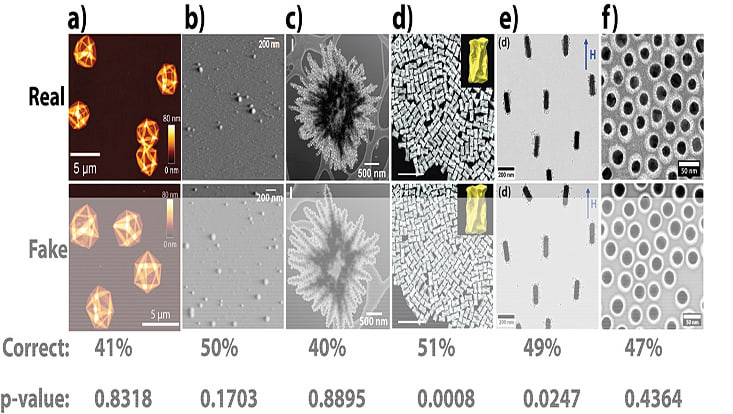

There has been enormous progress in generative artificial intelligence (AI). Even highly sophisticated microscope images can now be faked with ease, and the fakes are often indistinguishable from real ones, even to experts. In a recent publication in Nature Nanotechnology, an international team of nanoscientists discusses strategies for safeguarding the integrity of their discipline.

Microscopy methods in nanomaterials science are used to determine crucial properties such as uniformity, size, shape, crystallinity, composition and rigidity, all of which are useful for drawing valid conclusions in a wide range of applications, such as energy storage, catalysis and nanomedicine. In recent years, however, scientists have observed instances of scientific misconduct in which microscopy images give a false impression of sample quality. “This problem has recently become more serious because generative artificial intelligence is now capable of creating customized fake derivatives of any image-based technique,” says Wolfgang Parak, a professor at the Institute for Nanostructure and Solid State Physics at the University of Hamburg and a researcher in the Cluster of Excellence “CUI: Advanced Imaging of Matter”. He and his co-authors see an urgent need to address this issue.

To illustrate their point, the team conducted an anonymous survey among scientists, showing each participant either a real microscopy image or a version generated by AI. Two hundred and fifty participants were asked to label the images as “real” or “fake”, or to indicate that they were “unsure”. The results confirmed that the scientists could not distinguish real nanomaterial images from most AI-generated fakes.

Consequences for science

This inability to tell real images from fakes has consequences: among other things, it renders traditional approaches to detecting scientific misconduct obsolete. The authors advocate reinforcing best practices against image manipulation in general. For example, they suggest providing raw data files alongside publications, as they believe such files would be significantly more challenging for generative AI to produce. Because raw data files can often be opened only with specific software licensed for the instrument used in the measurements, the authors emphasize the need to develop software that can read raw data files of all kinds.

Another significant development would be to use AI to identify AI-generated images. This idea is already gathering momentum across the scientific community.

Regarding nanomaterial images, the team emphasizes the importance of a change in perspective: imperfections should be appreciated, and it should be acknowledged that not all nanomaterials or assemblies are perfect. They also stress the need to state explicitly when polished images are being produced for social media.

Lastly, the team collectively encourages replication studies of highly cited publications that announce new materials. Parak: “It is important for our community to tackle unethical AI use and accept it as a potential and growing problem.”

Original Publication

Nadiia Davydiuk, Elisha Krieg, Jens Gaitzsch, Patrick M. McCall, Günter K. Auernhammer, Mu Yang, Joseph B. Tracy, Sara Bals, Wolfgang J. Parak, Nicholas A. Kotov, Luis M. Liz-Marzán, Andreas Fery, Matthew Faria & Quinn A. Besford

The rising danger of AI-generated images in nanomaterials science and what we can do about it

Nature Nanotechnology 20, 1174–1177